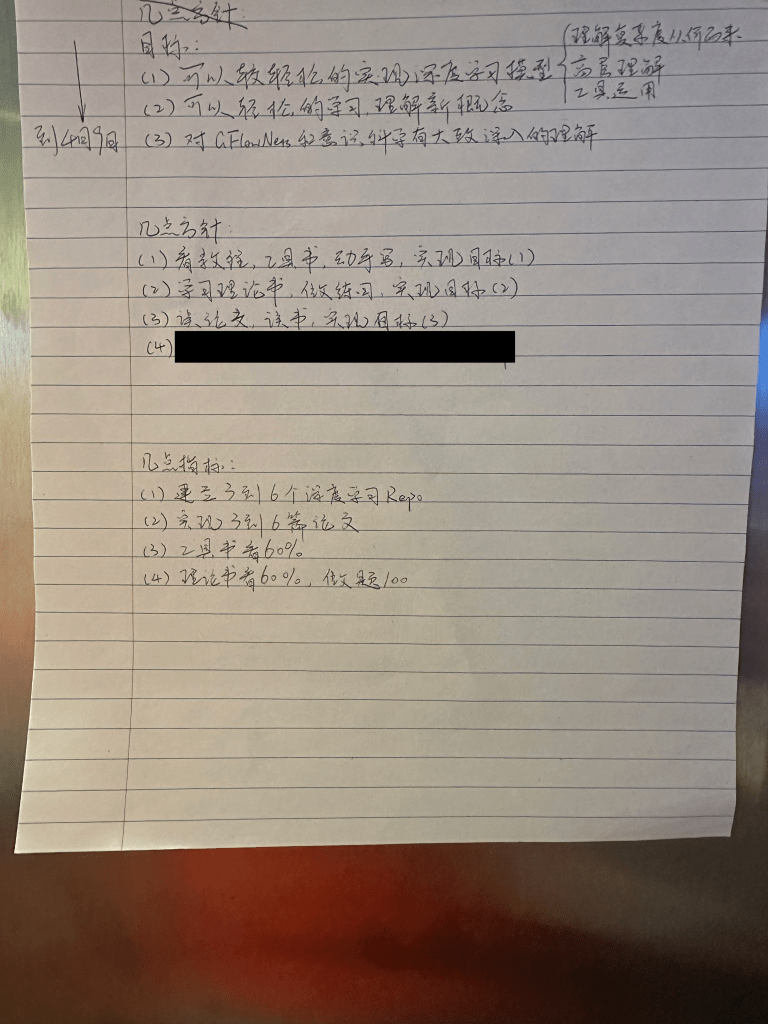

After a week of absence, I am finally back here writing. I was in contact with someone helpful and important who is already doing my dream research and is willing to guide me through my system 2 journey. And I was planning my next chapter of study, which is planned to last 3 months (or 4 months) based on his guidance.

I have 3 goals for this next chapter and one of them is getting better at implementing DL model with PyTorch. So in “DL implementation training” posts, I will be documenting my training of using PyTorch to implement DL models and papers/ideas.

Completing Andrej Karpathy’s series “Neural Networks: Zero to Hero” will be my first training milestone. It consists of 13 hours of follow along videos on implementing and training NNs. On the surface, it serves my goal so I will start with this. If it turns out not to be as good, I will go for something else. The reference book I use is https://www.manning.com/books/deep-learning-with-pytorch.

A note is that I already have some experience implementing NNs from almost 10 years ago but I need the training of using modern tools and train the act into my unconsciousness.

I will use a 2-pass training model:

- On the first pass: I will follow along the video and code

- On the second pass: I will implement everything by myself, without referencing to the video.

- Then I will focus on the code that I can’t reproduce

———————–

So here we go: https://youtu.be/VMj-3S1tku0?si=fCqg-KihVO_mxFf3

Notes:

- NNs are simply mathematical expressions. Certain class of mathematical expressions. Backprop is more general.

- Andrej’s claim: “Mircograd is all we need to train NNs, everything else is for efficiency”

———————–

My plan is documented here in Chinese:

Leave a comment