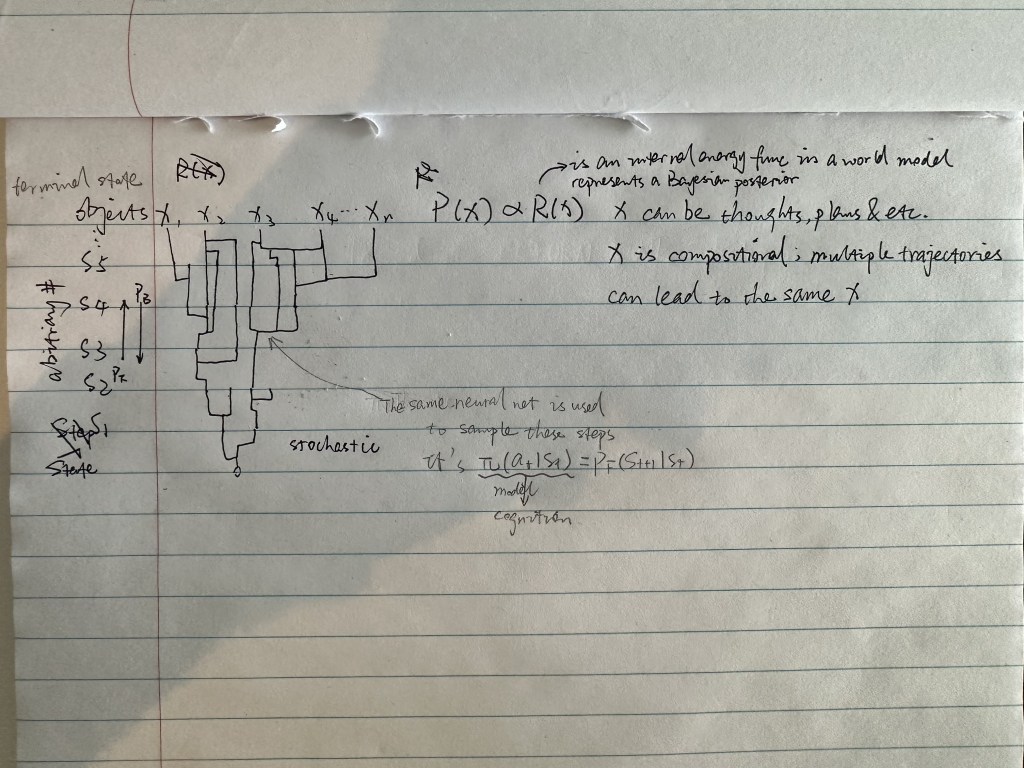

Note: updated mental map of GFlowNet

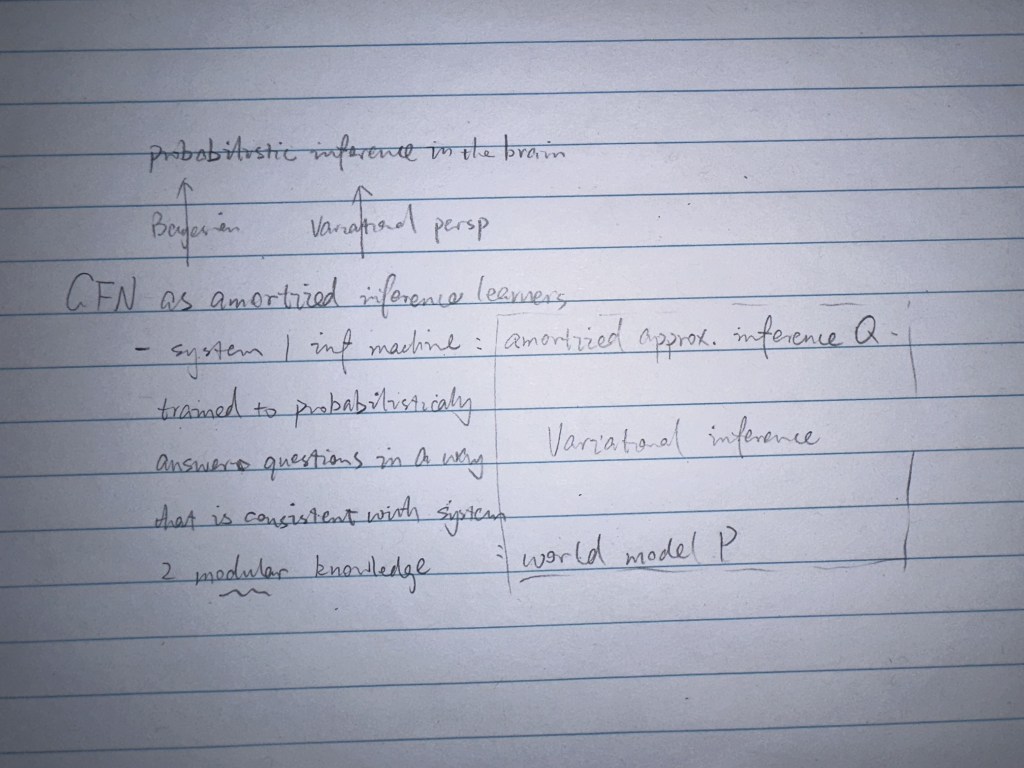

How does GFlowNet approximate human reasoning? (high level, from Bayesian and variational inference perspectives)

Note: training GFlowNet

- The inference machine Q helps train the world model P.

- Q can be trained with generated data so that it becomes consistent with P. During training, Q makes inferences, the consistency between those inferences and the knowledge in P is quantified, and that quantity is optimized with modern DL techniques.

- The generated data is an unlimited supply of questions, composed combinatorially, that Q uses to query P.

- P, which represents an explanation of the real world, needs to be trained on real-world data.
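A minimal sketch of Q being trained against P with generated data, under loudly stated assumptions: the world model P is reduced to a hypothetical, made-up reward table `REWARD` over 3-bit strings; Q is a tabular forward policy that builds a string one bit at a time; and the consistency objective is trajectory balance, one of the standard GFlowNet training losses, optimized here with plain SGD and hand-written gradients instead of a deep-learning framework. The trajectories Q samples from itself play the role of the generated data. If training succeeds, Q generates each string with probability proportional to its reward, and `exp(log_z)` estimates the total reward.

```python
import numpy as np

rng = np.random.default_rng(0)

N_BITS = 3
# Hypothetical unnormalized reward supplied by the world model P, one value per terminal string.
# Index = int(x, 2), e.g. "011" -> 3. The numbers are made up for illustration.
REWARD = np.array([1.0, 2.0, 1.0, 4.0, 1.0, 2.0, 1.0, 8.0])

# Inference machine Q: a forward policy with one pair of logits per non-terminal state (prefix).
# Action 0 appends "0", action 1 appends "1".
logits = {prefix: np.zeros(2) for prefix in ["", "0", "1", "00", "01", "10", "11"]}
log_z = 0.0  # learned estimate of log(sum of all rewards)

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

def sample_trajectory():
    """Generated data: build x one bit at a time by sampling from Q's current policy."""
    prefix, steps = "", []
    for _ in range(N_BITS):
        p = softmax(logits[prefix])
        action = int(rng.random() < p[1])  # 1 with probability p[1]
        steps.append((prefix, action))
        prefix += str(action)
    return prefix, steps

def train(n_iters=20000, lr=0.05):
    """Minimize the trajectory balance loss delta**2 with SGD on sampled trajectories."""
    global log_z
    for _ in range(n_iters):
        x, steps = sample_trajectory()
        # Each x has exactly one construction path here, so the log P_B term is 0 and is omitted.
        delta = (log_z
                 + sum(np.log(softmax(logits[s])[a]) for s, a in steps)
                 - np.log(REWARD[int(x, 2)]))
        # d(delta**2)/d(log_z) = 2*delta; d(delta**2)/d(logits[s]) = 2*delta*(onehot(a) - softmax).
        log_z -= lr * 2 * delta
        for s, a in steps:
            grad = -softmax(logits[s])
            grad[a] += 1.0
            logits[s] -= lr * 2 * delta * grad

def sampling_prob(x):
    """Exact probability that Q's forward policy generates the terminal string x."""
    prob = 1.0
    for i, bit in enumerate(x):
        prob *= softmax(logits[x[:i]])[int(bit)]
    return prob

train()
for i in range(2 ** N_BITS):
    x = format(i, "03b")
    # Compare Q's sampling probability with the target R(x) / sum(R).
    print(x, round(sampling_prob(x), 3), REWARD[i] / REWARD.sum())
print("exp(log_z) =", float(np.exp(log_z)), "vs total reward", REWARD.sum())
```

Because every string has exactly one construction path in this toy, the backward-policy term of trajectory balance drops out; in a real GFlowNet, states are usually reachable along many paths and that term matters.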

What does amortization mean in general CS and under the context of GFlowNet?

My answer before reading: amortization in CS means spreading the computational cost across a series of computations. An example is an algorithm that does some work every time it runs, with each run contributing to a final result. Amortization in GFlowNet means front-loading the computational cost of inference into the training phase.

Critique: my answer looks correct but isn’t. In CS, and especially in ALGORITHM ANALYSIS, amortization means spreading the computational cost of ONE (expensive) operation over MULTIPLE USES. It isn’t about how the work is actually carried out but about how its cost is accounted for.
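The textbook illustration from algorithm analysis is a dynamic array that doubles its capacity whenever it fills up: the occasional append that triggers a resize copies every element, yet the average cost per append stays constant. A small simulation, assuming a unit-cost model chosen here for illustration (1 unit per element written or copied):

```python
def append_costs(n):
    """Simulate n appends to a capacity-doubling dynamic array and return each append's cost.

    Assumed cost model: writing the new element costs 1, and a resize costs 1 per element copied.
    """
    capacity, size, costs = 1, 0, []
    for _ in range(n):
        cost = 1  # write the new element
        if size == capacity:
            cost += size   # the rare expensive operation: copy everything into a 2x buffer
            capacity *= 2
        size += 1
        costs.append(cost)
    return costs

costs = append_costs(1000)
print(max(costs))               # 513: a single append can be expensive...
print(sum(costs) / len(costs))  # 2.023: ...but the cost averaged over all appends stays below 3
```

The one expensive copy is "paid for" by the many cheap appends around it, which is the accounting view of amortization rather than a statement about when the work actually happens.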

So its interpretation in GFN is consistent with the one in algorithm analysis: the cost of training is amortized over many inferences.
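The same accounting can be written out for amortized inference. The symbols are introduced here for illustration and are not taken from either article: $C_{\text{train}}$ is the one-time cost of training Q, $c_Q$ the cost of answering one query with the trained Q, and $c_P$ the cost of answering one query directly from P without Q (for example, by search or sampling). Over $n$ queries, the cost per query is

$$
\frac{C_{\text{train}} + n\,c_Q}{n} \;=\; \frac{C_{\text{train}}}{n} + c_Q \;\longrightarrow\; c_Q \quad (n \to \infty),
$$

so, assuming $c_Q < c_P$, training Q pays off once $\frac{C_{\text{train}}}{n} + c_Q < c_P$, that is, once $n > \frac{C_{\text{train}}}{c_P - c_Q}$.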

But why is amortization emphasized? It’s inherent in ML.

TO_BE_ANSWERED.

My guess: amortized learning here aims at training, in the general sense, the inference machine to perform inference that is consistent with the world model. It isn’t about the training phase of ML as such; it simply makes use of that phase.
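To illustrate the guess above with a toy: Q is trained once, on (z, x) pairs generated by sampling the world model P, and can then answer any query "which hidden cause z explains observation x?" with a single lookup instead of recomputing Bayes' rule against P. Everything here is a hypothetical setup chosen for illustration: the tables `PRIOR` and `LIKELIHOOD` are made up, and the objective is plain maximum likelihood on generated samples (similar to the sleep phase of the wake-sleep algorithm), not a GFlowNet loss.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy world model P: a prior over a hidden cause z and a likelihood for an observation x.
PRIOR = np.array([0.5, 0.3, 0.2])             # P(z) for z in {0, 1, 2}
LIKELIHOOD = np.array([[0.7, 0.2, 0.1],       # P(x | z=0) for x in {0, 1, 2}
                       [0.1, 0.6, 0.3],       # P(x | z=1)
                       [0.2, 0.2, 0.6]])      # P(x | z=2)

def exact_posterior(x):
    """Answer the query directly from P with Bayes' rule: P(z | x) is proportional to P(z) P(x | z)."""
    unnormalized = PRIOR * LIKELIHOOD[:, x]
    return unnormalized / unnormalized.sum()

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

# Inference machine Q: q(z | x) = softmax(q_logits[x]), one row of logits per observation x.
q_logits = np.zeros((3, 3))

def train_q(n_samples=20000, lr=0.02):
    """Fit Q on (z, x) pairs generated by P itself by minimizing -log q(z | x) with SGD."""
    for _ in range(n_samples):
        z = rng.choice(3, p=PRIOR)
        x = rng.choice(3, p=LIKELIHOOD[z])
        grad = softmax(q_logits[x])  # gradient of -log q(z | x) w.r.t. q_logits[x]
        grad[z] -= 1.0               # is softmax(q_logits[x]) - onehot(z)
        q_logits[x] -= lr * grad

def kl(p, q):
    """KL(p || q): how far Q's answer q is from P's exact answer p."""
    return float(np.sum(p * np.log(p / q)))

train_q()
for x in range(3):
    p, q = exact_posterior(x), softmax(q_logits[x])
    print(f"x={x}  exact={p.round(3)}  Q={q.round(3)}  KL={kl(p, q):.4f}")
```

The per-query KL divergence should be small after training; it is the "consistency between Q and P" from the training notes, in miniature.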

Note: different angles of two articles

The blog post scaling-in-the-service-of-reasoning-model-based-ml and The-GFlowNet-Tutorial look at the same construct from two angles: the first focuses on the separation between the inference machine Q and the world model P; the second focuses on the inference machine itself.

Note: the essential difference between GFN and LLMs

This one sentence illustrates it:

If one is asked “how can I make fluffy brioche?”, the answer should be based on the relevant pieces of knowledge about baking, combined in a coherent way.

scaling-in-the-service-of-reasoning-model-based-ml

Combining pieces of knowledge into an answer reflects genuine understanding (even if that understanding may be wrong), as opposed to, in a reductionist view, LLMs’ probabilistic generation of text, which manipulates the medium of thought rather than thought itself.
